Nicole Rigillo is a digital scholarship research fellow at the University of Edinburgh and a visiting scholar at the Indian Institute of Management in Bangalore. She will begin a fellowship at the Berggruen Institute in January 2019.

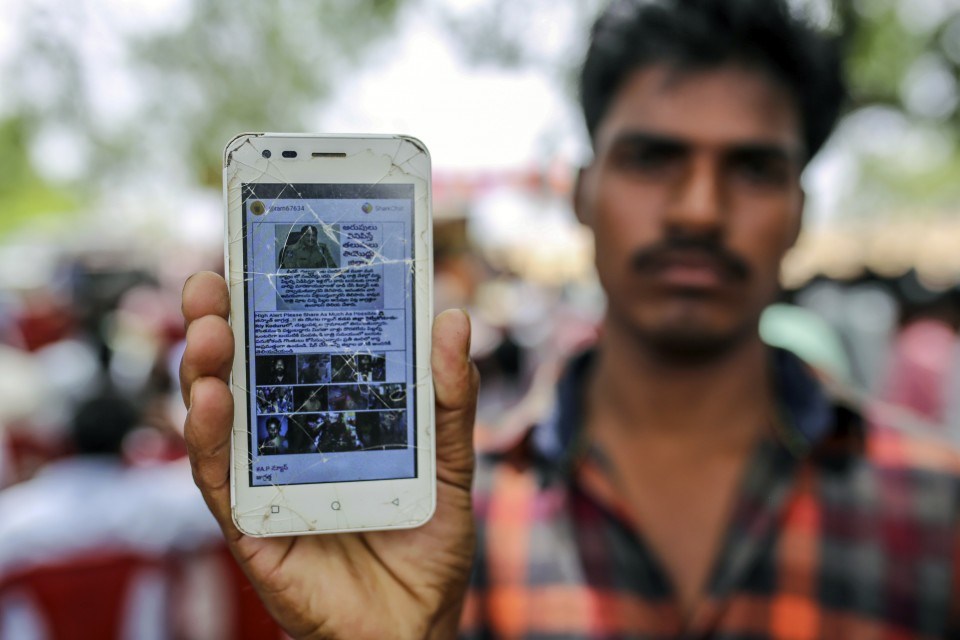

BANGALORE, India — In July, WhatsApp took steps to counter the spread of misinformation after rumors of child kidnapping circulating in its chat groups led to mob lynchings in India, one of the platform’s biggest markets. A full 75 percent of Indians use WhatsApp’s “groups” function, which allows up to 256 people at once to communicate and share content in real time. It was through these groups that the baseless accusations of child kidnapping went viral.

To address this problem, WhatsApp, which is owned by Facebook, began flagging forwarded messages with an explicit “forwarded” label to show when a text had not originated with the sender. In India, it also tried to slow the spread of messages by limiting forwarding to only five contacts at a time. According to WhatsApp, the aim of these changes was to “help keep the platform the way it was designed to be: a private messaging app.”

Unfortunately, WhatsApp’s efforts will do little to stem the flow of false news, because they fail to address the unique nature of the platform. WhatsApp is not typical social media, since its content is not public. That content sits in a social media black box known as “dark social,” a term coined by the journalist Alexis Madrigal to describe social sharing that occurs entirely outside what web analytics platforms can measure. WhatsApp’s end-to-end encryption compounds the problem. Users cannot report an inappropriate post simply by flagging it; they must take screenshots of the message and send them to WhatsApp. Because encryption prevents the company from viewing the original posts, it cannot remove the content itself; it can only ban users.

While this encryption affords WhatsApp users privacy, the app’s widespread global use allows false news to spread rapidly with little to no oversight. A 2018 study by the Reuters Institute for the Study of Journalism suggests that dark social sharing will only continue to grow as social media users worldwide seek more private spaces in which to communicate.

If WhatsApp wants to maintain encryption while avoiding outbreaks of violence, it needs to make viral content visible in the public sphere. WhatsApp has already partnered with news organizations in Mexico and Brazil to create fake-news-busting initiatives that track and counter viral content. But this approach is unlikely to work fast enough to stop dangerous rumors like those in India from claiming lives. Scaling up similar efforts across India would require enormous resources: there, WhatsApp content circulates among a fast-growing base of 200 million users, potentially in any of the country’s 22 official languages.

One solution, as Himanshu Gupta, who works at an Indian tech startup, and the scholar Harsh Taneja have recently suggested, is for WhatsApp to build a crowdsourced system, managed by human moderators, to monitor problematic content that users forward to it. Because users would be sending these messages in themselves, the company would gain access to their content without breaking encryption on the platform. WhatsApp could then cross-reference the forwarded messages against its own metadata to monitor dark social sharing, flag false content or even purge such content from the platform entirely. This would not require making WhatsApp forwards publicly available online, which might only amplify the circulation of dangerous content.

But a “forwarding bank” of this nature would make dark social content visible to WhatsApp alone, excluding other important stakeholders such as law enforcement and watchdog groups. To avoid information asymmetry and to encourage action, groups working in the public interest should also have access to forwarding banks. Government and law enforcement agencies could then monitor trending dark social content by region and remain alert to the potential for violence. Journalists and fake-news busters could follow up on leads and challenge misinformation. Researchers could track trends in content sharing and monitor how government and police are acting on messages received from forwarding banks.

The right to private communication is a good worth protecting. But without adequate oversight by a wider range of stakeholders, mass sharing over dark social platforms is likely to cause profound social disruption, particularly as deepfakes, manipulated videos generated with artificial intelligence, further blur the line between what is real and what is fake.

In the interest of the public good, WhatsApp needs to lead the development of technological infrastructure to bring its dark social into the light. At the moment, dangerous messages surface in the public sphere only once they have turned into collective violence. And by then, it’s already too late.

This was produced by The WorldPost, a partnership of the Berggruen Institute and The Washington Post.